Want to know more?

Are you interested in this project? Or do you have one just like it? Get in touch. We’d love to tell you more about it.

On 23 July 2024, the platform team responsible for machine learning operations (MLOps) at the John Lewis Partnership went live with an AI chatbot that supported the move to the Partnership’s impressive new office at 1 Drummond Gate, Pimlico. The bot – nicknamed Drummie – was made available through Google Chat and let all head office members of staff (Partners) ask direct questions and get detailed information back about the office move.

The timeliness of the data and the fact that it supported all Partners who work in the head office meant that uptake was extensive – people used it, and loved it. Drummie was credited with making the complex logistics of a major head office move much less stressful for those involved, and similar applications of generative AI are now in demand for new use cases across the business.

adoption within a week of launch

Questions answered by Drummie

of queries were answered in seconds

The John Lewis Partnership is one of the UK’s oldest, largest and most popular retailers; it includes John Lewis and Waitrose and is the largest employee-owned business in the UK. There are 34 John Lewis stores across the UK, plus johnlewis.com, and 329 Waitrose stores across the UK, plus waitrose.com. It has total trading sales of £12.4 billion and a workforce of 74,000 Partners (employees).

In early 2024, the John Lewis Partnership announced that they were relocating their London Head Office. The move promised a transformative shift with a new building, innovative workspace design, and modernised work practices. However, the volume of information surrounding the move posed a challenge: how could Partners stay informed amidst all the changes? And how could the facilities team efficiently address the influx of enquiries from staff?

JLP’s internal comms team was looking for innovative solutions within the MLOps Generative AI toolkit to enhance Partner engagement and streamline responses to enquiries regarding the relocation. The current process, reliant on a single comms team member managing a deluge of Partner emails, was proving unsustainable.

Luckily, JLP’s data engineering partner Equal Experts already had a machine learning specialist exploring the large language model (LLM) capabilities offered by Google Vertex. The focus was on developing a chatbot designed to field a broad spectrum of user queries via Google Chat. With the requisite content readily available on the intranet, the primary challenge lay in optimising response generation, defining the chatbot’s personality, and establishing clear parameters for handling out-of-scope requests from Partners.

As the relocation drew near, a sense of urgency propelled the team to convene with representatives from the Drummond Gate Facilities and Internal Comms Teams. Their mission: to harness the innovative capabilities recently developed by the MLOps Platform Team and deploy a solution that was both effective and user-friendly, ensuring seamless adoption by Facilities and Comms Partners who were unfamiliar with this cutting-edge technology.

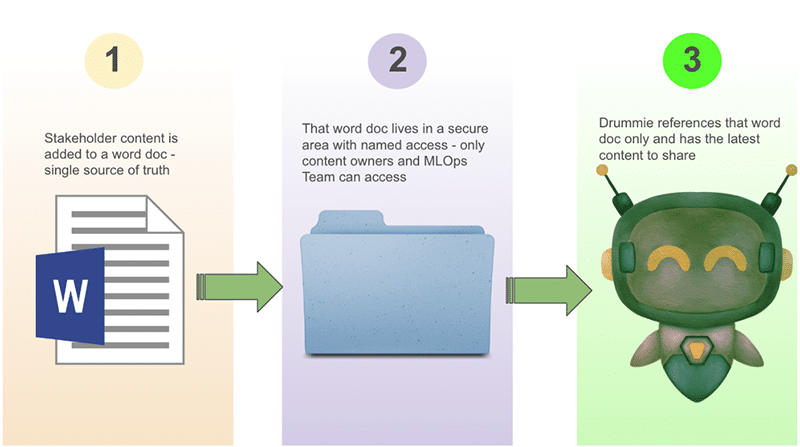

While the front end was easy enough to use for content owners and end users alike, the mechanics in the background required more focus:

Our approach involved iterating quickly, to ensure we understood the best parameters, with frequent system testing against a well-defined set of Q&A.
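To illustrate the kind of repeatable check this implies, here is a minimal sketch of a test loop run against a fixed Q&A set. It is not the team's actual harness: the reference questions, the keyword-based scoring, and the names `REFERENCE_QA`, `score_answer`, `evaluate` and `stub_chatbot` are all assumptions made for illustration.

```python
from typing import Callable

# Hypothetical reference set: each question is paired with keywords that an
# acceptable answer should mention. The real Q&A set is not published.
REFERENCE_QA = [
    {"question": "Where is the new head office?",
     "expected_keywords": ["Drummond Gate", "Pimlico"]},
    {"question": "How do Partners reach Drummie?",
     "expected_keywords": ["Google Chat"]},
]


def score_answer(answer: str, expected_keywords: list[str]) -> float:
    """Return the fraction of expected keywords present in the answer."""
    if not expected_keywords:
        return 1.0
    text = answer.lower()
    hits = sum(1 for keyword in expected_keywords if keyword.lower() in text)
    return hits / len(expected_keywords)


def evaluate(answer_fn: Callable[[str], str], qa_set: list[dict],
             pass_mark: float = 1.0) -> dict:
    """Run every reference question through answer_fn and summarise results."""
    results = []
    for item in qa_set:
        score = score_answer(answer_fn(item["question"]),
                             item["expected_keywords"])
        results.append({"question": item["question"], "score": score,
                        "passed": score >= pass_mark})
    pass_rate = sum(r["passed"] for r in results) / len(results) if results else 0.0
    return {"pass_rate": pass_rate, "results": results}


def stub_chatbot(question: str) -> str:
    """Stand-in for the real chatbot call; always gives the same answer."""
    return "The new office is at 1 Drummond Gate, Pimlico."


report = evaluate(stub_chatbot, REFERENCE_QA)
for row in report["results"]:
    print(f"{row['question']}: score={row['score']:.2f} passed={row['passed']}")
print(f"pass rate: {report['pass_rate']:.0%}")
```

Re-running a loop like this after each change to the prompt or retrieval settings gives a quick signal on whether the change helped. Keyword matching is a deliberately crude scoring rule; a real evaluation would likely need human review or a more robust comparison.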

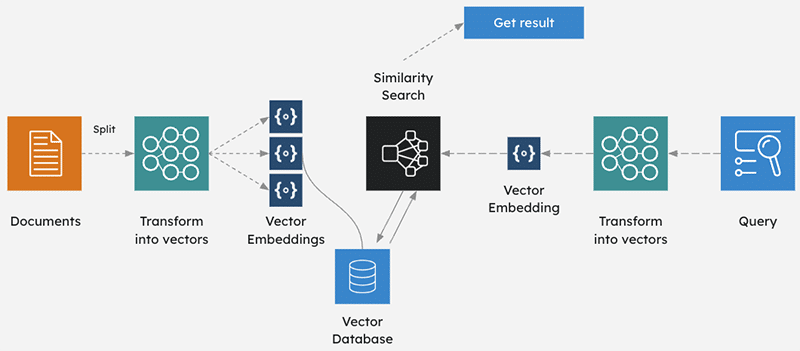

Large Language Models possess foundational knowledge derived from their training data. However, this inherent knowledge does not encompass specific contextual information, such as the JLP Partner migration from one location to another. To equip the LLM with this new knowledge, techniques like Fine-Tuning and Retrieval-Augmented Generation (RAG) are essential.

Fine-tuning, while powerful, presents challenges; we addressed these by using transfer learning, which leverages a pre-trained model’s knowledge base, adapting it to new tasks with smaller, focused datasets. Additionally, techniques like regularisation and dropout helped prevent overfitting, ensuring the model coped well with unseen data. Although potentially more expensive, this approach often yields exceptional results.

Retrieval-Augmented Generation further enhances LLMs by dynamically integrating external knowledge sources with the model’s generative capabilities. This empowers the LLM to access and leverage relevant information in real-time, leading to more accurate and contextually rich responses.

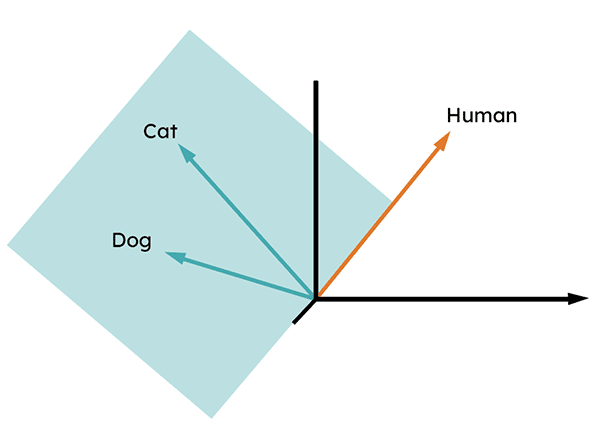

Given a user question, the system needs to retrieve the most relevant documents. This is possible because the previous steps have transformed the documents (and, in real time, the user queries) into numerical vectors, so relevance can be measured as the similarity between vectors:

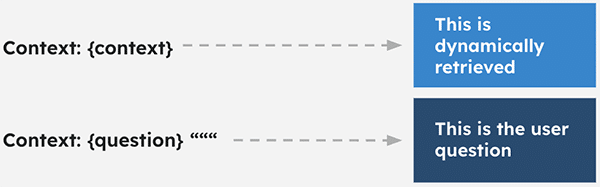
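The retrieval step can be sketched in a few lines of Python. This is an illustration only: the word-count `embed` function is a toy stand-in for a real embedding model, and the document snippets, function names and `top_k` parameter are hypothetical rather than taken from the Drummie codebase.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[tuple[float, str]]:
    """Return the top_k (score, document) pairs most similar to the query."""
    query_vec = embed(query)
    scored = [(cosine_similarity(query_vec, embed(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Hypothetical intranet snippets, for illustration only.
DOCUMENTS = [
    "Drummie is available to head office Partners through Google Chat.",
    "The new head office is at 1 Drummond Gate, Pimlico.",
    "The relocation introduces a new workspace design.",
]

for score, doc in retrieve("Where is the new head office?", DOCUMENTS):
    print(f"{score:.3f}  {doc}")
```

In a production system the document vectors would normally be computed once and stored, so that only the query is embedded at request time.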

A Good Prompt is all you need

Writing the right prompt is the difference between successfully retrieving the information you need with the context you desire and generating random references to the data you want. We used prompts like this to instruct the LLM:

“You are an AI assistant that provides detailed and accurate answers based on retrieved information. Below is the context and the user’s question. Use the context to generate a comprehensive and precise answer. If the context is not enough, say that you don’t know the answer.”
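As a rough illustration of how an instruction like this might be combined with retrieved context and the user's question before being sent to the model, consider the sketch below. The `Context:` / `Question:` / `Answer:` layout, the fallback text for empty context, and the `build_prompt` name are assumptions; only the instruction text comes from the prompt quoted above.

```python
# Instruction text as quoted in the case study.
SYSTEM_INSTRUCTION = (
    "You are an AI assistant that provides detailed and accurate answers "
    "based on retrieved information. Below is the context and the user's "
    "question. Use the context to generate a comprehensive and precise "
    "answer. If the context is not enough, say that you don't know the answer."
)


def build_prompt(context_chunks: list[str], question: str) -> str:
    """Assemble one prompt string from the instruction, context and question.

    The section layout used here is a hypothetical convention.
    """
    context = "\n\n".join(chunk.strip() for chunk in context_chunks if chunk.strip())
    if not context:
        # Make the absence of context explicit so the model can decline.
        context = "(no relevant context was retrieved)"
    return (
        f"{SYSTEM_INSTRUCTION}\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question.strip()}\n\n"
        "Answer:"
    )


prompt = build_prompt(
    ["The new head office is at 1 Drummond Gate, Pimlico."],
    "Where is the new head office?",
)
print(prompt)
```

Keeping the instruction separate from the context and question makes it easy to iterate on the wording of one part without touching the others.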

Drummie was launched in the Partnership in July 2024 and user feedback was incredibly positive.

Since Drummie’s successful deployment, the MLOps product team has seen a surge of interest, receiving numerous proposals for new use cases. Some of these mirror Drummie’s Q&A format but require integration with diverse content and media types. Others, particularly those from the supply chain team, present more intricate challenges, seeking to leverage Generative AI’s potential to revolutionise logistics and tracking processes.

JLP teams are currently prioritising high-impact Generative AI opportunities, empowering the MLOps team to focus on delivering innovative Gen AI solutions that unlock tangible business value for the John Lewis Partnership.
