For a technical, in-depth exploration of non-determinism in LLMs, read Adam’s article ‘Non-Determinism in LLMs: Why Generations Isn’t Exactly Reproducible’.

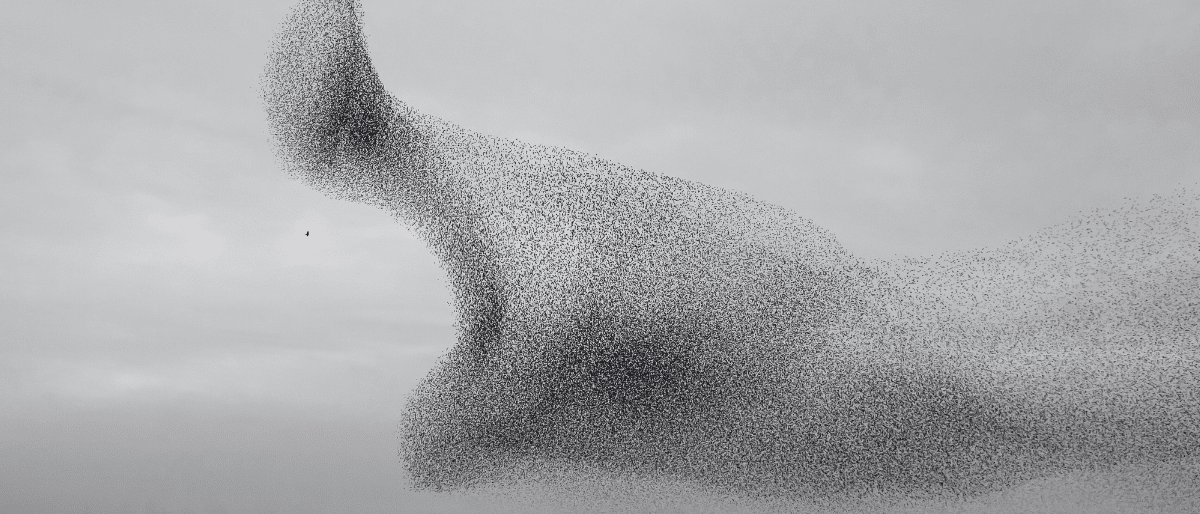

AI systems are designed to provide insightful responses, yet even when you ask the same question twice, the answers can differ slightly. This behavior, known as non-determinism, is not a flaw but a natural consequence of how these models operate.

LLMs generate text by converting your input into a series of numbers that pass through several layers of a neural network, which uses an “attention mechanism” to decide which parts of the input matter most. Step by step, the model predicts the next likely piece of text, appends it to the text so far, and repeats this process to build a full response.
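The predict, append, repeat loop described above can be sketched in a few lines. This is a toy illustration only: `TOY_TABLE` and `next_token_scores` are hypothetical stand-ins for a trained network, and the loop always picks the single highest-scoring option, which real systems often do not do.

```python
# Toy stand-in for a trained network (hypothetical): maps the last token to a
# score for each candidate next token. A real LLM computes these scores with
# many attention layers over the whole input.
TOY_TABLE = {
    "the": {"cat": 2.0, "dog": 1.5},
    "cat": {"sat": 2.5, "ran": 1.0},
    "dog": {"ran": 2.0, "sat": 0.5},
    "sat": {"<end>": 3.0},
    "ran": {"<end>": 3.0},
}


def next_token_scores(tokens):
    """Score candidate next tokens, looking only at the last token."""
    return TOY_TABLE.get(tokens[-1], {"<end>": 1.0})


def generate(prompt_tokens, max_new_tokens=10):
    """Repeatedly predict the next token and append it to the sequence."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)
        best = max(scores, key=scores.get)  # always take the top-scoring token
        if best == "<end>":
            break
        tokens.append(best)
    return tokens


print(generate(["the"]))  # ['the', 'cat', 'sat']
```

Because this sketch has no randomness and uses exact table lookups, it returns the same answer every time. The variability discussed in the rest of this article enters through sampling and through the arithmetic that produces the scores.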

Even though controllable settings help steer how consistent the output is, they can’t completely prevent slight differences from appearing. This mix of careful design and natural randomness is where the promise of perfect consistency meets practical limits.

Parameters Affecting AI Output

Several key settings influence how an AI model decides on its answers:

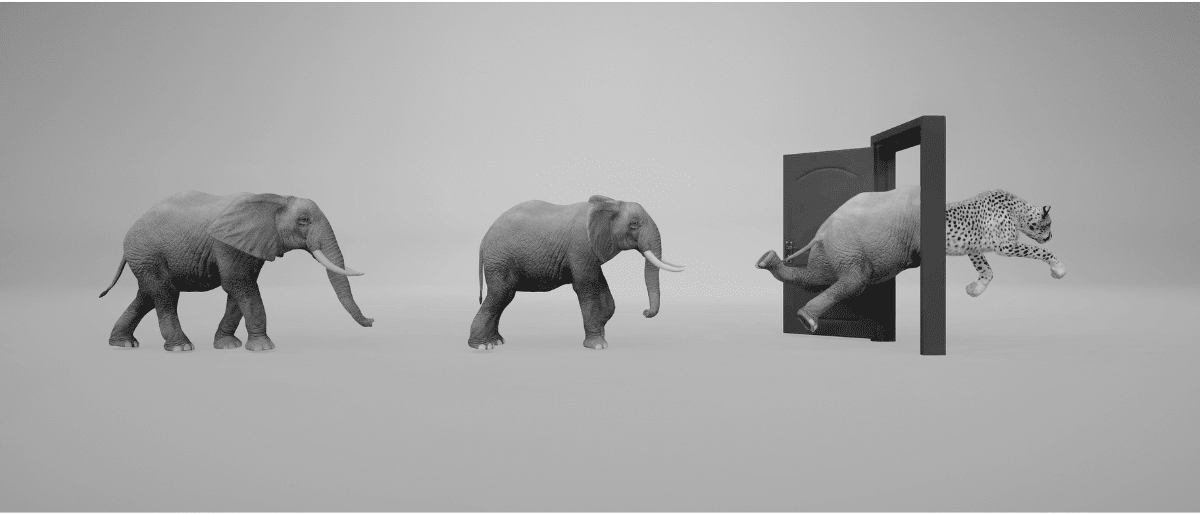

- Temperature: This parameter controls the randomness in the decision-making process. A lower temperature makes the model more conservative and predictable, while a higher temperature allows for more variety and creativity in its responses.

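- Random Seed: A random seed sets the starting point for generating random numbers. Reusing the same seed makes the sampling step draw the same sequence of random numbers, which makes runs more repeatable.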

- Top-p Sampling: This method limits the selection to the smallest set of most likely options whose combined probability reaches a threshold p. Even within this subset, minor differences in probability can lead the model to choose different words or phrases, contributing to varied outcomes.

These settings work together to balance consistency and creativity in AI outputs, ensuring that the model can both provide reliable information and explore alternative perspectives.
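As a rough sketch of how the three settings interact, the example below samples one word from a small set of scores. The function names, the example scores, and the choice to create a fresh random generator per call are all assumptions made for illustration; production systems implement these steps differently and on much larger vocabularies.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; temperature must be greater than 0."""
    scaled = [score / temperature for score in logits.values()]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return {tok: e / total for tok, e in zip(logits, exps)}


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their combined probability reaches top_p."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}  # renormalise the survivors


def sample_token(logits, temperature=1.0, top_p=1.0, seed=None):
    """Sample one token; a fixed seed makes the random draw repeatable."""
    rng = random.Random(seed)
    probs = top_p_filter(softmax_with_temperature(logits, temperature), top_p)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


# Hypothetical scores for the word after "The sky is"
logits = {"blue": 2.0, "grey": 1.0, "green": 0.5, "loud": -1.0}

print(sample_token(logits, temperature=1.0, top_p=0.9, seed=42))
print(sample_token(logits, temperature=1.0, top_p=0.9, seed=42))  # same seed, same pick
print(sample_token(logits, temperature=0.1))  # low temperature strongly favours "blue"
```

Calling `sample_token` without a seed at a high temperature will return different words from run to run, which is the intended creative behaviour rather than a bug.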

The Computational Challenge

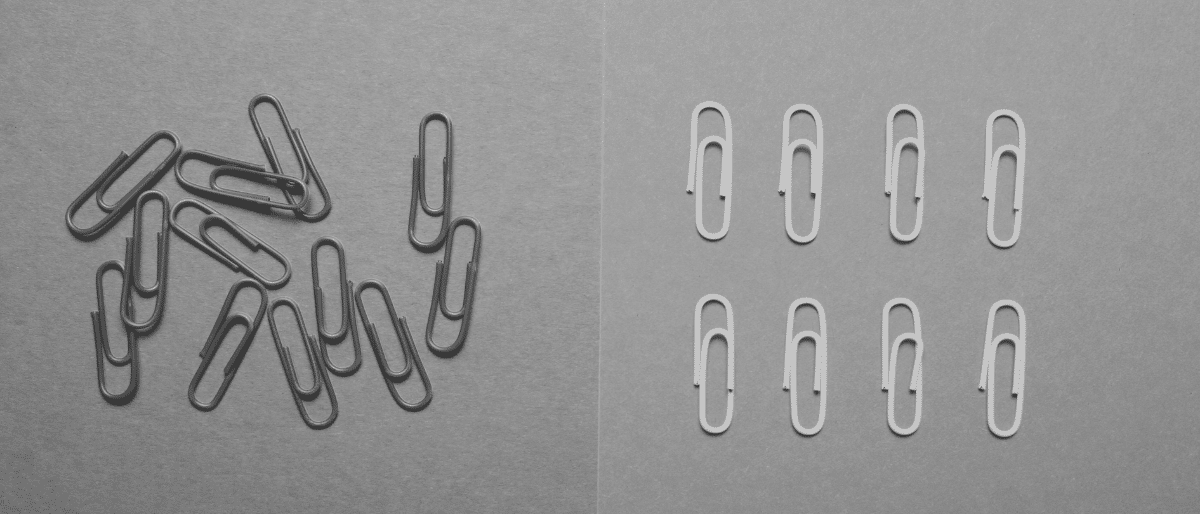

At its core, an AI model follows strict mathematical principles, yet the real-world execution of these calculations introduces some variability. LLMs compute with floating-point numbers (numbers with decimals), which come with precision limits that can introduce tiny rounding errors during calculations.
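A quick way to see these precision limits is with ordinary Python numbers. The helper `to_float32` is introduced here only for illustration; it rounds a value to 32-bit precision, one of several reduced-precision formats that hardware can use.

```python
import struct


def to_float32(x):
    """Round a 64-bit Python float to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]


print(0.1 + 0.2)         # 0.30000000000000004
print(0.1 + 0.2 == 0.3)  # False: the sum carries a tiny rounding error

# Storing the same value with fewer bits shifts it slightly.
print(to_float32(0.1) == 0.1)  # False
print(abs(to_float32(0.1) - 0.1))
```

Each individual error is tiny, but a single response involves an enormous number of such calculations.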

Furthermore, AI models run on powerful hardware, often using graphics processing units (GPUs) to perform many operations simultaneously. This parallel processing can lead to slight differences in the order of operations, and because rounding happens after every step, adding the same numbers in a different order can give a slightly different result. When you combine these small rounding errors with the way tasks are split across numerous processing threads, even the tiniest discrepancy can be amplified, resulting in slightly different outputs each time.
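The effect of operation order can be reproduced without a GPU, because floating-point addition is not associative. The sketch below adds the same three numbers in two different orders and then shows how the discrepancy can flip a choice between two options. The magnitudes are deliberately exaggerated and the "yes"/"no" scores are made up; in a real model the differences are far smaller and matter only when two candidates are nearly tied.

```python
def sum_in_order(values, order):
    """Add the values one at a time, visiting them in the given index order."""
    total = 0.0
    for i in order:
        total += values[i]
    return total


def pick(scores):
    """Return the option with the highest score."""
    return max(scores, key=scores.get)


# The same three contributions, as if accumulated by threads finishing in
# different orders.
contributions = [1e16, 1.0, -1e16]

score_a = sum_in_order(contributions, [0, 1, 2])  # the 1.0 is rounded away
score_b = sum_in_order(contributions, [0, 2, 1])  # the 1.0 survives

print(score_a, score_b)  # 0.0 1.0

# A small numerical difference is enough to change which option wins.
print(pick({"yes": score_a, "no": 0.5}))  # no
print(pick({"yes": score_b, "no": 0.5}))  # yes
```

Once one word differs, every later prediction is conditioned on a different text, which is how a tiny discrepancy can grow into a visibly different response.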

Why Perfect Reproducibility Remains Elusive

Despite our best efforts to control randomness with fixed seeds and sampling methods, achieving exact repeatability in AI responses is extremely challenging. The combined effects of parameter settings and the inherent limitations of computer arithmetic mean that every run of a model might produce a unique result. While these differences are usually minor and don’t impact the overall quality of the answer, they serve as a reminder that AI operates within the bounds of complex, real-world computing.

Embracing Variability in AI

Understanding that variability is an inherent part of AI operations helps set realistic expectations. Instead of viewing these slight differences as errors, it’s more accurate to see them as a natural feature of advanced computational systems. This flexibility allows AI to adapt, provide diverse insights, and ultimately offer richer, more nuanced perspectives. However, if exact repeatability is critical for your use case, you need to pay close attention to how your model is operating.
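One simple way to pay that kind of attention is to run the same prompt several times with identical settings and count how many distinct outputs come back. The sketch below assumes a hypothetical `generate_fn(prompt)` callable that wraps whatever model you use; the two toy models are placeholders so the example runs on its own.

```python
import random
from collections import Counter


def count_distinct_outputs(generate_fn, prompt, runs=5):
    """Call generate_fn repeatedly and tally each distinct output."""
    return Counter(generate_fn(prompt) for _ in range(runs))


def seeded_toy_model(prompt):
    """Placeholder model that reseeds its randomness on every call."""
    rng = random.Random(0)
    return prompt + " " + rng.choice(["yes", "no", "maybe"])


def unseeded_toy_model(prompt):
    """Placeholder model that draws from unseeded randomness."""
    return prompt + " " + random.choice(["yes", "no", "maybe"])


seeded = count_distinct_outputs(seeded_toy_model, "Is it raining?")
unseeded = count_distinct_outputs(unseeded_toy_model, "Is it raining?", runs=20)

print(len(seeded))    # 1: every run produced the same text
print(len(unseeded))  # usually more than 1
```

With a real model, more than one distinct output under fixed settings is a sign that hardware or arithmetic effects are still in play.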

By appreciating the factors that drive non-determinism, users can better interpret AI outputs and leverage them effectively—even when the answers aren’t exactly the same each time.
