“Patient presents with shortness of breath, worse when lying flat. Symptoms began three days ago…”

Thousands of healthcare providers translate complex patient conversations into structured medical documentation daily. In emergency rooms, primary care offices, and specialists’ clinics, converting a human conversation into a precise SOAP note remains critical yet time-consuming. While generative AI promises to revolutionise this process, a crucial question remains: How do we know if it’s getting it right?

Medical documentation demands precision. When a doctor records a patient’s complaint that “my chest feels heavy,” even small inaccuracies in documentation could affect future medical decisions. As organisations race to implement AI solutions across industries, including healthcare, structured approaches, and robust evaluation frameworks in particular, are central to success.

In this series, we’ll use medical documentation as a concrete example to demonstrate a systematic approach to tackling a GenAI challenge, with a strong focus on evaluation (Evals).

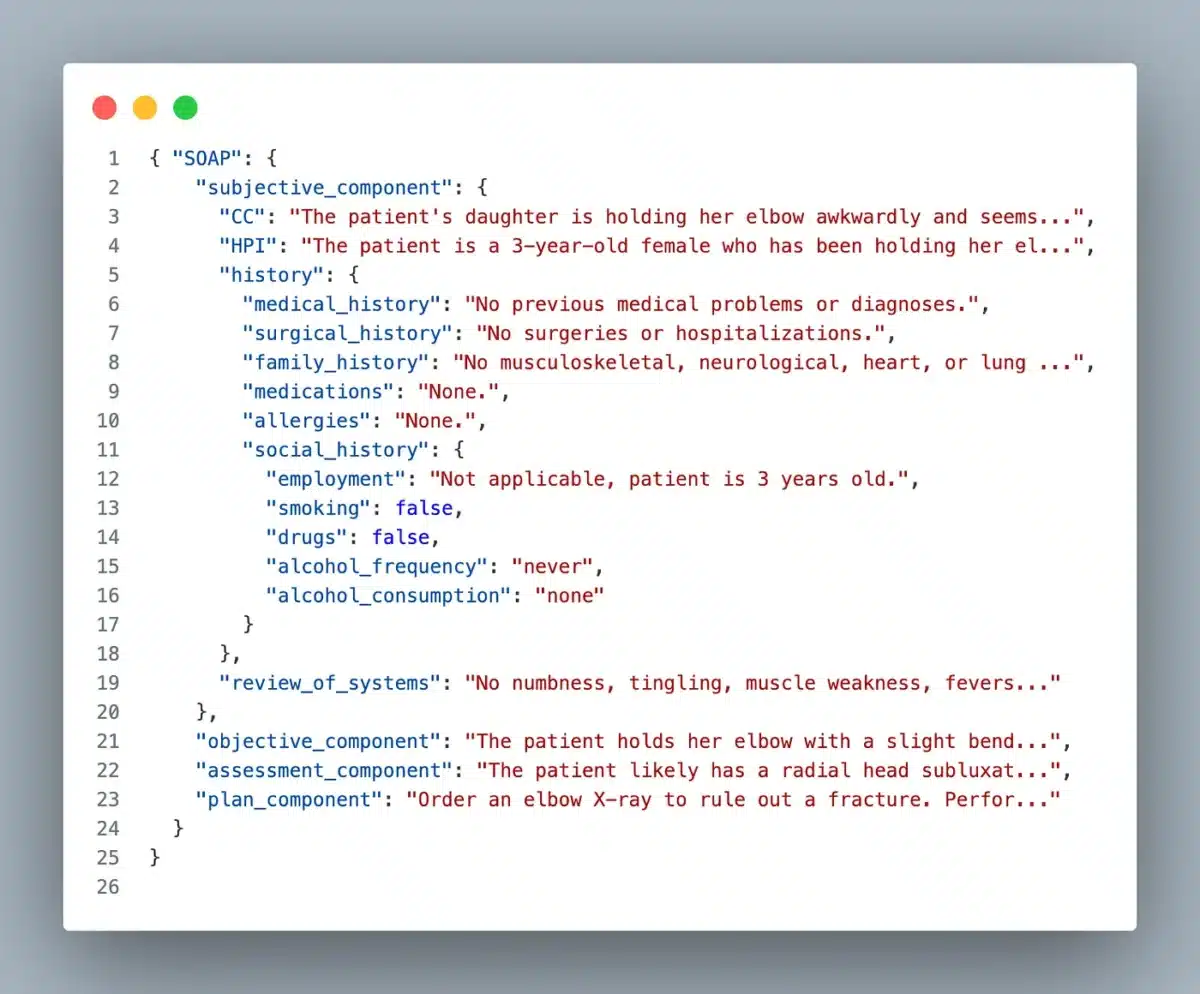

Understanding SOAP notes

Before diving into the evaluation challenge, let’s clarify what a SOAP note is. SOAP is an acronym that stands for:

- Subjective: The patient’s symptoms, complaints, and history.

- Objective: Physical examination findings, vital signs, and test results.

- Assessment: The provider’s diagnosis or clinical impression.

- Plan: Treatment decisions, prescriptions, and follow-up.

While SOAP notes are commonly understood as a four-part structure, hands-on collaboration with practising physicians revealed a more nuanced reality. Production systems must account for intricate subsections, specialised terminology patterns, and domain-specific validation requirements that emerge from actual clinical workflows.

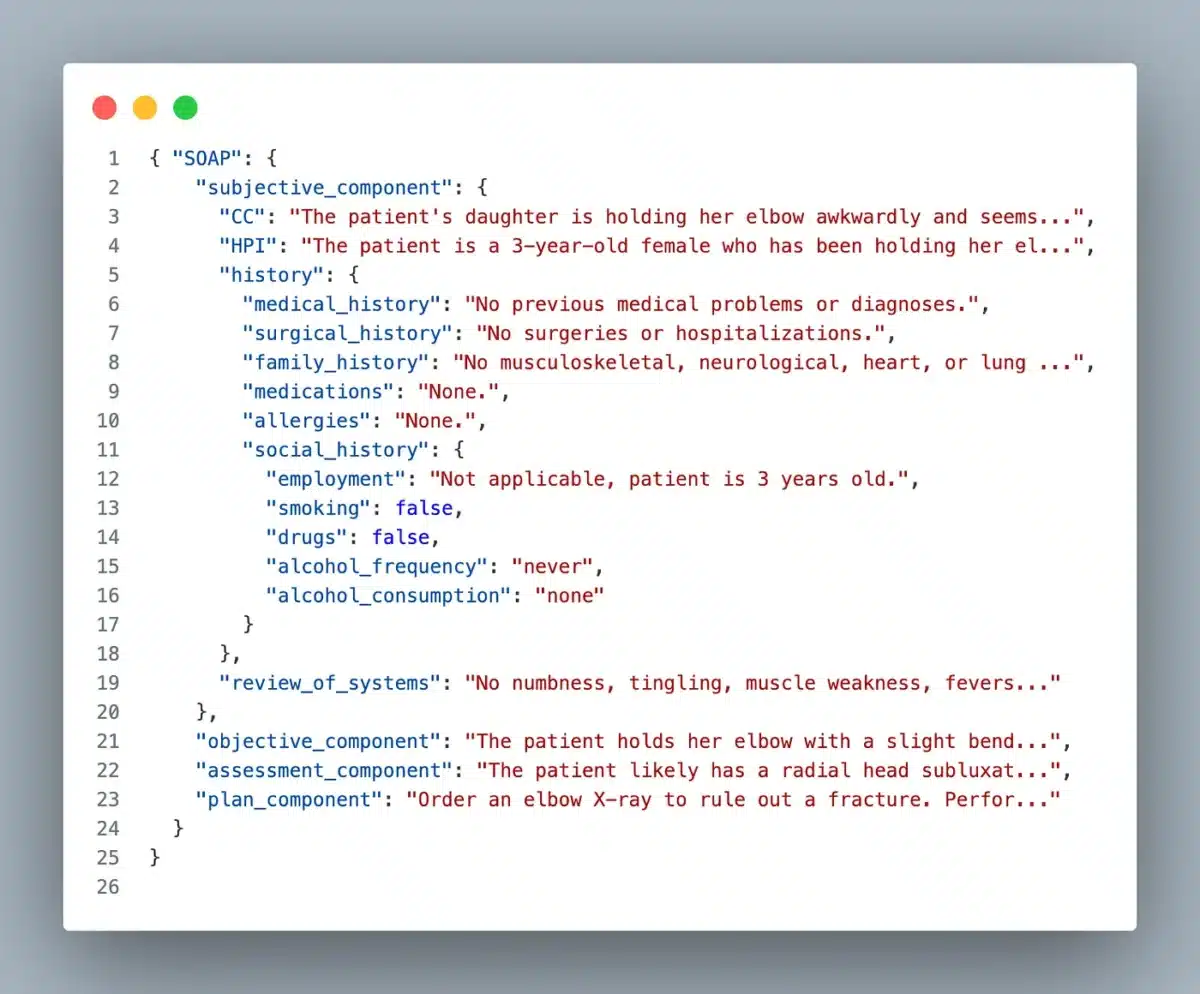

Detailed SOAP structure

The Subjective component is particularly complex, containing several key subsections:

- Chief complaint (CC): The primary reason for the visit, usually in the patient’s own words.

- History of present illness (HPI): A detailed narrative of the current medical issue.

- History: A comprehensive background including surgical history, family history, current medications, allergies, and social history/toxic behaviours (smoking, alcohol, drugs).

- Review of systems: A systematic inventory of symptoms across all major body systems.

While the Objective, Assessment, and Plan sections are more straightforward in structure, they require precise medical terminology and clear clinical reasoning.
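One way to make this hierarchy concrete for later evaluation is to encode it as a typed schema. The sketch below is a minimal illustration under our own assumptions: the class and field names (`SOAPNote`, `Subjective`, `review_of_systems`, and so on) are hypothetical, and the History subsection is flattened into separate fields.

```python
from dataclasses import dataclass, field


@dataclass
class Subjective:
    chief_complaint: str = ""             # CC: reason for the visit, ideally in the patient's words
    history_of_present_illness: str = ""  # HPI: narrative of the current issue
    surgical_history: str = ""
    family_history: str = ""
    medications: list[str] = field(default_factory=list)
    allergies: list[str] = field(default_factory=list)
    social_history: str = ""              # smoking, alcohol, drugs
    # Review of systems: body system (e.g. "respiratory") -> findings
    review_of_systems: dict[str, str] = field(default_factory=dict)


@dataclass
class SOAPNote:
    subjective: Subjective = field(default_factory=Subjective)
    objective: str = ""   # physical exam findings, vital signs, test results
    assessment: str = ""  # diagnosis or clinical impression
    plan: str = ""        # treatment, prescriptions, follow-up
```

Splitting History into separate fields would let a later evaluation score each subsection on its own, for example flagging a note that lists medications but omits allergies.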

Here’s an example of this structured format:

This structured format highlights the complexity of our evaluation challenge: our AI system needs to identify and categorise information correctly, maintain proper medical terminology, and ensure completeness across all required sections.
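Completeness is probably the easiest of these requirements to check automatically. As a rough sketch, assuming the model returns free text with labelled headings, a first check could simply look for each expected heading. The heading list and the `missing_headings` helper are our own illustration, not a clinical standard.

```python
import re

# Hypothetical heading set; real labels would come from the clinicians' template.
REQUIRED_HEADINGS = (
    "Chief Complaint",
    "History of Present Illness",
    "History",
    "Review of Systems",
    "Objective",
    "Assessment",
    "Plan",
)


def missing_headings(note_text: str, required: tuple[str, ...] = REQUIRED_HEADINGS) -> list[str]:
    """Return the required headings that never appear at the start of a line.

    A heading counts as present if a line starts with it (optionally after
    markdown '#' or '*' markers), optionally followed by a parenthetical such
    as "(CC)", and then by ':' or the end of the line. Matching ignores case.
    """
    missing = []
    for heading in required:
        pattern = rf"^[ \t#*]*{re.escape(heading)}(?:[ \t]*\([^)\n]*\))?[ \t*]*(?::|$)"
        if not re.search(pattern, note_text, flags=re.IGNORECASE | re.MULTILINE):
            missing.append(heading)
    return missing
```

A check like this only catches absent sections; whether the content under each heading is accurate and correctly categorised still needs a proper evaluation framework.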

The solution: Augmented documentation with human validation

When implementing AI systems in clinical workflows, the gap between theoretical capability and practical success often comes down to thoughtful integration patterns and human-in-the-loop design. Rather than attempting to fully automate medical documentation, a solution can take a copilot approach: for instance, generating a draft SOAP note for the clinician to review.

A production-ready system would require careful consideration of the entire workflow, from speech recognition to electronic health record (EHR) integration and regulatory compliance. This series will focus on the generative AI component: converting clinical conversations into structured SOAP notes. This scope allows us to dive deep into the core technical challenges of medical documentation generation.

The starting point: Appointment transcripts

Our exploration assumes we already have accurate transcripts of medical appointments. These transcripts would capture the natural back-and-forth between provider and patient, including:

- Patient descriptions of symptoms

- Provider questions and responses

- Discussion of medical history

- Physical examination findings

- Treatment discussions

Here’s a simplified example:

Doctor: What brings you in today?

Patient: I’ve been having trouble breathing for the past three days.

Doctor: Is it worse at any particular time?

Patient: Yes, especially when I try to lie down flat in bed.

Doctor: I’m going to listen to your lungs now… I can hear some crackling sounds in the lower left side.

Converting this natural dialogue into the structured SOAP note is our target task.
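Before prompting a model, it can help to normalise transcripts into explicit speaker turns. The sketch below assumes the simple `Speaker: utterance` layout shown above; the `Turn` class and `parse_transcript` function are hypothetical, and real transcripts may use different speaker labels, which is why the labels are a parameter.

```python
import re
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # e.g. "Doctor" or "Patient"
    text: str


def parse_transcript(raw: str, speakers: tuple[str, ...] = ("Doctor", "Patient")) -> list[Turn]:
    """Split a plain-text transcript into speaker turns.

    Assumes each turn starts on its own line as "<Speaker>: <utterance>".
    Unlabelled lines are appended to the previous turn (lines before the
    first turn are dropped); blank lines are skipped.
    """
    label = "|".join(re.escape(s) for s in speakers)
    turn_re = re.compile(rf"^({label}):\s*(.*)$")
    turns: list[Turn] = []
    for line in raw.splitlines():
        line = line.strip()
        if not line:
            continue
        match = turn_re.match(line)
        if match:
            turns.append(Turn(speaker=match.group(1), text=match.group(2)))
        elif turns:
            turns[-1].text += " " + line
    return turns
```

Explicit turns could also make later checks easier, for example verifying that a symptom placed in the Subjective section was actually reported by the patient.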

The data challenge

To evaluate any AI system, we need high-quality data. This presents a unique challenge in healthcare due to privacy concerns and the sensitive nature of medical records. However, for our experiment, we’ve found an invaluable resource.

In a 2022 article in the Nature Portfolio journal Scientific Data (Fareez et al.), a team of resident doctors and senior medical students across various specialities (including internal medicine, physiatry, anatomical pathology, and family medicine) created a dataset of simulated medical conversations. The dataset focuses on respiratory cases but also contains musculoskeletal, cardiac, dermatological, and gastrointestinal cases.

Using this dataset allows us to:

- Create a low-effort proof of concept (PoC)

- Evaluate our system without privacy concerns

- Have access to expert-validated examples

- Test across a range of medical scenarios

- Share results and methodologies openly with the community

This dataset provides an excellent starting point for our evaluation framework. While simulated data has limitations, accessing professionally crafted clinical scenarios gives us a solid foundation for systematically evaluating our AI system’s capabilities.

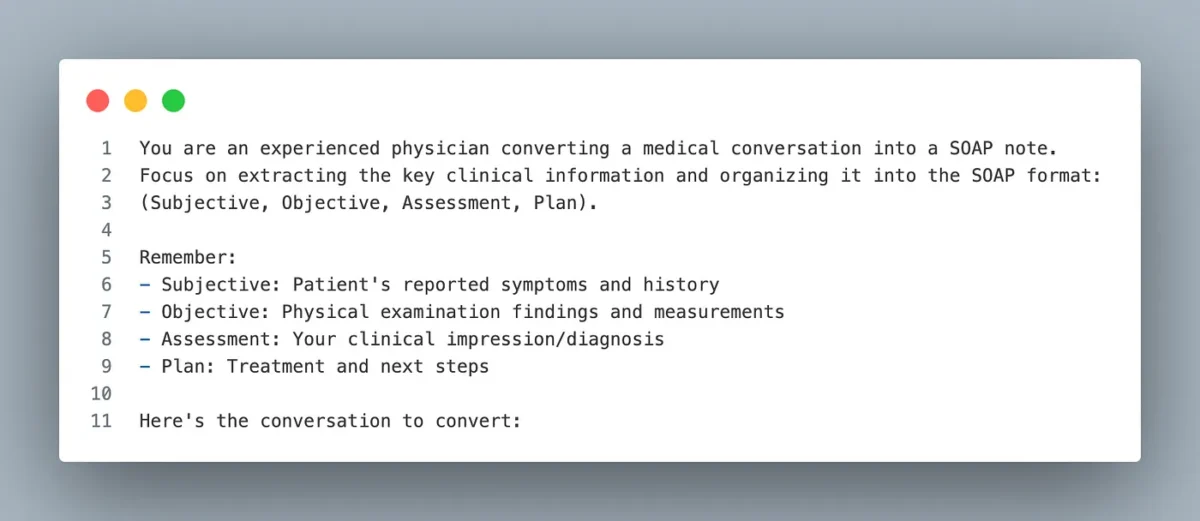

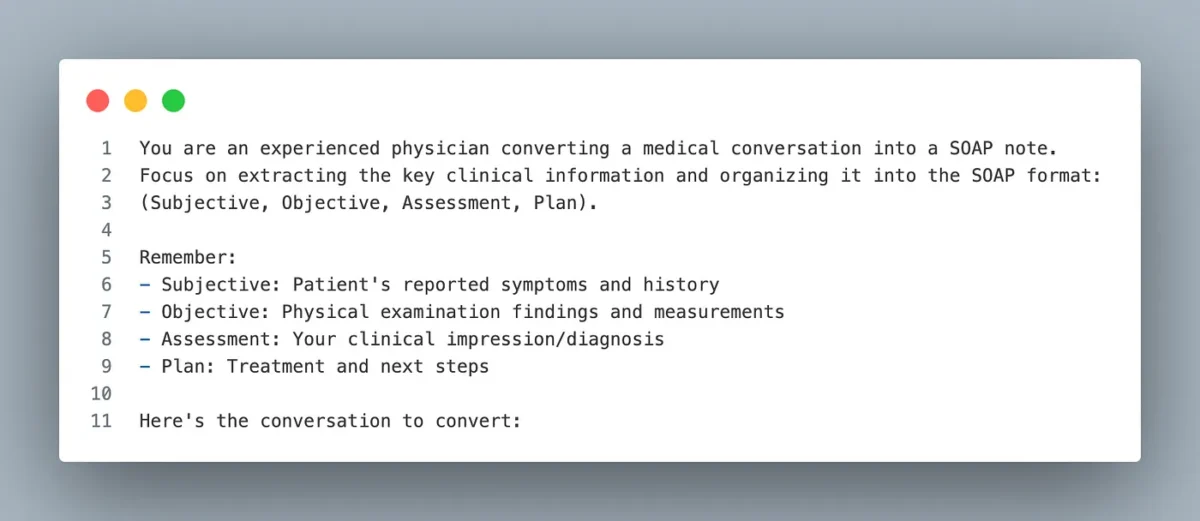

Starting small: A quick proof of concept

Before diving deep into the challenge, let’s start with a simple question: can GenAI handle this task even at a basic level? A quick, low-effort proof of concept can give us valuable insights before we invest more time and resources. The most straightforward test is a single, simple prompt:

Then, using one of the large models, such as GPT-4o or Claude 3.5 Sonnet, we test the prompt on a few conversations and observe the results.
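As a hypothetical illustration (the instruction wording is ours), such a single-prompt test might look like the sketch below: one user message that combines instructions with the raw transcript. `build_prompt_messages` and `generate_soap_note` are illustrative names; the API call uses the OpenAI Python SDK (v1+) chat completions interface and needs the `openai` package and an `OPENAI_API_KEY`.

```python
# Hypothetical instruction wording for a single-prompt proof of concept.
INSTRUCTIONS = (
    "Convert the following doctor-patient conversation into a SOAP note with "
    "Subjective, Objective, Assessment, and Plan sections. Use standard medical "
    "terminology and include only information stated in the conversation."
)


def build_prompt_messages(transcript: str) -> list[dict[str, str]]:
    """Wrap the instructions and transcript in a single chat message."""
    return [{"role": "user", "content": f"{INSTRUCTIONS}\n\nConversation:\n{transcript}"}]


def generate_soap_note(transcript: str, model: str = "gpt-4o") -> str:
    """Call a chat model once; needs the `openai` package and OPENAI_API_KEY."""
    from openai import OpenAI  # lazy import so the prompt builder works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=build_prompt_messages(transcript),
    )
    return response.choices[0].message.content or ""
```

Keeping the prompt in a plain function makes it easy to rerun the same handful of conversations after each prompt change, which is a natural starting point for a more systematic evaluation.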

Initial results from the proof of concept revealed promising technical signals that warranted deeper investigation. The system demonstrated foundational capabilities in several critical areas:

- The model seems to understand the basic structure of medical documentation

- It captures key clinical information

- It maintains some level of medical terminology

- The conversion from conversation to structured note makes sense

Based on these results, there’s enough signal to justify investing time in:

- Creating a proper evaluation framework

- Iterating on the solution design

Embracing a systematic approach

We’ve outlined the start of a methodical engineering approach to a complex healthcare challenge. By breaking down our journey into discrete, manageable components, we can tackle the complexity of medical documentation generation with appropriate rigour:

- Domain understanding: Starting with a deep dive into SOAP note structure and real-world requirements by talking with domain experts ensures our technical solution addresses actual clinical needs rather than theoretical use cases.

- Integration strategy: Acknowledging the critical role of human-in-the-loop early in the design process shapes our technical decisions and evaluation criteria.

- Data-first thinking: Identifying and validating data sources before deep technical implementation prevents common pitfalls in healthcare AI deployments. Our use of professionally simulated data provides a robust foundation for systematic evaluation.

- Rapid validation: The low-effort proof of concept is a critical go/no-go decision point. This pragmatic approach saves resources while providing clear signals about technical feasibility.

We’ll dive deep into building an evaluation framework in an upcoming article, exploring how to systematically assess the quality of generated SOAP notes and establish meaningful metrics. We’ll also focus on iterating on the solution, since our initial proof of concept, while promising, doesn’t yet generate fully structured notes. By maintaining this methodical approach throughout the series, we’ll develop not just a technical solution but a robust framework for evaluating and improving clinical documentation AI.

While the illustrative use case is for healthcare, the systematic approach we’re developing can serve as a blueprint for implementing GenAI in other domains.